Going to jail

Matching blog post to Going to jail @ RKey.Tech/Docs/

Getting thrown in jail

This was a fairly stressful venture, as I did it while at my day job working as a medical receptionist (so far I’ve been unsuccessful at getting back into IT after being laid off). So this was done while answering phones, booking appointments, and checking clients out. Needless to say, the stress level was a bit high, with plenty of room for making a mistake there or here. But I’m addicted to IT and technology, so I have to play 24/7/365 or I will probably just die of boredom.

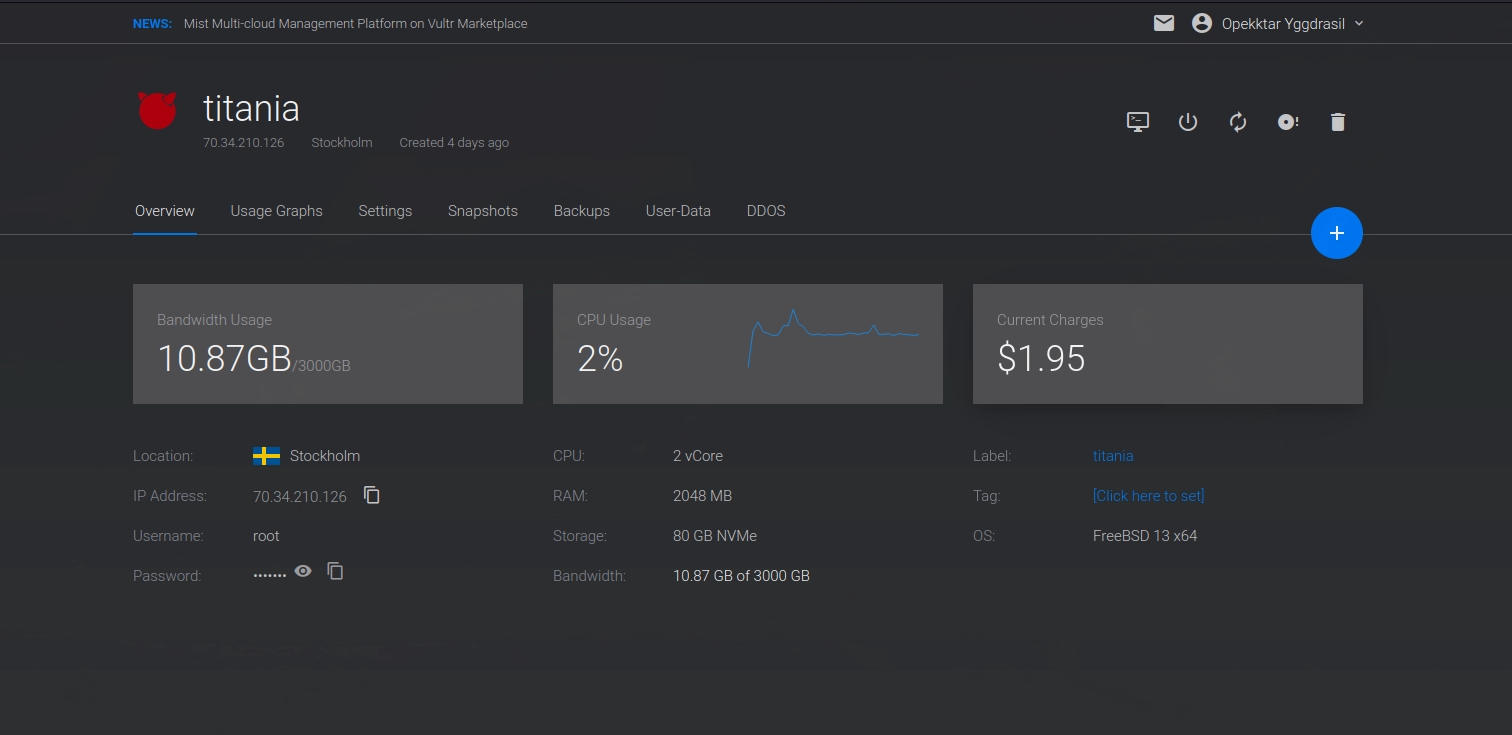

I will create docs and post on how I set up the database jail and the NextCloud© jail in future installments. My goal here was just to get things moved off a host instance and into jails, and to consolidate things a bit. I use Cox Communications at home for internet, and they are notoriously slow on upload speeds, which really slowed down my NextCloud service. The server at home is awesome, with 72GB of RAM and 8TB of mirrored ZFS disk. But all the power in the world does you no good if your internet provider throttles your upload to less than 6 Mbps. (That’s not a typo.) Here is a word of advice: never run an OONI probe¹ on your internet provider in case they hold grudges. All stories for another day. And with a ~~80G~~ 128G NVMe, I think I’m good for a while, as I mostly just use CalDAV and WebDAV services. My photos still reside on Google© for now. That will be another project to document in the future.

So far I’m pretty happy with Vultr©, my configuration, and the performance. Yes, I need to compress some images and convert them to .webp, and there are some tweaks I could make elsewhere, I’m sure. But things are looking good so far.

I know everything below is in the docs section as well. But how else can I convey my excitement when I finished the migration and ……

At this point I say “Hold onto your butts” and reboot the VPS instance.

Within about a second I browsed to rkey.tech, rkey.online and nc.rkey.tech, and all 3 sites were up and operational. I then ssh’d into the host with `ssh -p 5000 titania`, and then into the jail with `ssh caddy`, and this all worked as well.

Then I jumped out of my chair and screamed “Holy fucking Shit!!!” Yeah, I was surprised it worked. There were a lot of moving pieces: not shutting down before tarring up a jail, and hell, using tar instead of bastille export for that matter. So many configs to make typos in. Yes, I’m a happy camper :)

---

¹ Born in 2012, the Open Observatory of Network Interference (OONI) is a non-profit free software project that aims to empower decentralized efforts in documenting internet censorship around the world.

Creating Vultr Instance

Matching blog post to Creating Vultr Instance @ RKey.Tech/Docs/

Creating instance at Vultr & setting up DNS

I initially created a “High Frequency” instance running FreeBSD 13 with 32GB NVMe, 1 vCore, 1GB RAM and 1GB bandwidth for testing. But my testing quickly became live, so I upgraded to 2 vCores, 80GB NVMe, 2GB RAM and 3 GB bandwidth.

Before the upgrade, I migrated my test-only domain bootstraps.tech over from Digital Ocean to Vultr. My domains are registered over at HostGator, so moving the domains simply meant that I created DNS entries for bootstraps.tech, opekkt.tech and opekktar.online at Vultr and then pointed HostGator from Digital Ocean to Vultr. I use ProtonMail for my mail servers, so I have to plug that into those DNS entries as well. One thing I see missing on many web sites is CAA entries. I always add CAA entries to my DNS configuration.

I use a Caddy server that auto-magically takes care of my SSL certs for me. I like lazy solutions that work.

When Vultr© sets up the initial DNS entry, you select the instance to point it to, and then Vultr creates a wildcard ‘*’ entry. I initially did not like this solution because... well, I don’t know why, but I’m finding I like the solution, as it only requires that I create a Caddy entry in my web server and then I’m done. Again, the lazy solution thing.

Before changing the DNS pointers, I needed to migrate my Caddy© server at Digital Ocean (DO) over to Vultr.

At DO I had a mixed setup. I had a Caddy server that ran on the host, not in a jail, breaking all my own rules. Then I had another server running only jails, which consisted of a WIKI and a couple of Ghost blogs. Everything else was just files in a directory on the host running the Caddy server. So my initial migration was just from an instance on Digital Ocean to Vultr, running at the host level. Since I deploy static web sites via rsync from my ~~Archlabs~~ Fedora workstation at home, I simply added the new host to my script and deployed to both instances for rkey.tech and rkey.online.
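Deploying to both instances from one script can be sketched roughly as below. This is not the actual deploy script: the host aliases `old-host` and `new-host`, the web root `/usr/local/www`, and the Hugo output directory `public/` are all assumptions made up for illustration. By default it only prints the rsync commands it would run.

```shell
#!/bin/sh
# Hypothetical values; substitute your own ssh host aliases and paths.
HOSTS="old-host new-host"     # e.g. the DO instance and the Vultr instance
SITE="rkey.tech"
SRC="public/"                 # trailing slash: sync the contents of public/
DEST_ROOT="/usr/local/www"    # assumed web root on each host

# Set DRY_RUN=0 to really run rsync; the default only prints the commands.
DRY_RUN=${DRY_RUN:-1}

deploy() {
    for host in $HOSTS; do
        if [ "$DRY_RUN" = 1 ]; then
            echo rsync -az "$SRC" "$host:$DEST_ROOT/$SITE/"
        else
            rsync -az "$SRC" "$host:$DEST_ROOT/$SITE/"
        fi
    done
}

deploy
```

Keeping both hosts in the loop until DNS has switched over means either instance can serve the current site during the cut-over.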

Of course, prior to syncing things over, and with any new VPS, I generally do two things first.

- I turn off password login in ssh. I keep my public key at DO, Vultr©, and Linode© for that matter, so when I create a VPS the first thing I do is add a new user. Then I rsync the keys that the VPS provider added to root when I created the instance over to my user account.

Note that in the docs section there is no slash on .ssh but there is one at the end of user/. This is not necessary, but it is a habit of mine. When syncing directories, if you leave the trailing / off the source, the directory itself gets synced rather than just its contents. Since my user does not have an .ssh directory yet, I leave off the slash. The / at the end of user/ is more about habit in this particular case; it does not need to be there. But when you have backed up terabytes of data to a server and forgotten the slash on either end, you tend to be fussy about such things.
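The trailing-slash behaviour is easy to check on throwaway directories before pointing rsync at anything real. A minimal sketch, assuming rsync is installed; the temp directories and the fake key line are made up for the demo and are not the paths from the docs section:

```shell
#!/bin/sh
# Build a scratch tree that mimics root's .ssh and two empty user homes.
demo=$(mktemp -d)
mkdir -p "$demo/root/.ssh" "$demo/user_a" "$demo/user_b"
echo "ssh-ed25519 not-a-real-key demo" > "$demo/root/.ssh/authorized_keys"

# No slash on the source: the .ssh directory itself is copied into user_a/.
rsync -a "$demo/root/.ssh" "$demo/user_a/"

# Slash on the source: only the contents of .ssh are copied into user_b/.
rsync -a "$demo/root/.ssh/" "$demo/user_b/"

ls -A "$demo/user_a"    # lists .ssh
ls -A "$demo/user_b"    # lists authorized_keys
```

On a real host the copied files would still belong to root after a copy like this, so a `chown -R` to the new user is usually needed before the keys are usable.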

- I make sure the new user can su to root. Linux is a mess: sometimes adding the user to `wheel` works, and sometimes there is not even a `wheel` group, so you have to add the user to `sudoers` and then modify the sudoers file via `visudo`. Generally Linux has some lame editor like Nano, and I screw everything up using `vi` keys, so if I’m only doing things once I usually just `vi` the file. On BSD I just make sure the user belongs to wheel when creating the account, and then I usually install `doas` and configure `/usr/local/etc/doas.conf`. Honestly, just for muscle memory, I usually install `opendoas` on my Linux boxes as well. My `doas.conf` file is super freaking simple.
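For reference, a doas.conf can be as short as one rule. The sketch below is a guess at a minimal setup, not the actual file from this server. It assumes you want every member of wheel to be able to run commands as root, and it writes to a temp file instead of /usr/local/etc/doas.conf.

```shell
#!/bin/sh
# Write a one-rule doas.conf to a scratch file (hypothetical content).
conf=$(mktemp)
cat > "$conf" <<'EOF'
# Let members of the wheel group run commands as root.
permit :wheel
EOF

# With doas installed, `doas -C "$conf"` parses the file and reports syntax errors.
cat "$conf"
```

Checking the file with `doas -C` before copying it into place is a cheap way to avoid locking yourself out with a typo.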

- After verifying that my user can ssh in, I turn off password authentication and root user login.

Most VPS providers already have `PasswordAuthentication no`, unless you do not have your keys on site or do not select them during creation. Of course, until you create a user and verify you can log in, you will almost certainly find `PermitRootLogin` explicitly set to `yes`, since the default is now `no`. You could just comment it out, but I like the feel of an explicit statement in a config that affects security. It’s a mental thing for me.
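Making both settings explicit can be sketched as a small shell function. This is an illustration, not the procedure from the docs section: the function name `harden_sshd` is made up, and the demo runs against a throwaway sample file rather than the live /etc/ssh/sshd_config.

```shell
#!/bin/sh
# Rewrite an sshd_config so both settings are stated explicitly.
# Caveat: the lines are appended at the end of the file, so this simple
# approach is only safe for configs without Match blocks, because settings
# that follow a Match line apply conditionally.
harden_sshd() {
    cfg=$1
    tmp=$(mktemp)
    # Drop existing lines for the two keywords, commented out or not.
    grep -Ev '^[[:space:]]*#?[[:space:]]*(PasswordAuthentication|PermitRootLogin)[[:space:]]' "$cfg" > "$tmp"
    printf 'PasswordAuthentication no\nPermitRootLogin no\n' >> "$tmp"
    cat "$tmp" > "$cfg"
    rm -f "$tmp"
}

# Demo on a scratch file that mimics a provider-supplied config.
sample=$(mktemp)
printf 'Port 5000\nPermitRootLogin yes\n#PasswordAuthentication yes\n' > "$sample"
harden_sshd "$sample"
cat "$sample"
```

On a real host, running `sshd -t` after editing checks the config for errors, and keeping the current ssh session open until a second login succeeds avoids locking yourself out.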

My Caddy configuration on the host is just running a file server for now, so the entries are very basic. Because I cannot for the life of me figure out why the “Congo” theme does not follow basic Hugo rules for inserting images into markdown documents, I have a catch-all entry for two things. One is a fake 404, which is just a redirect for funsies and gamesies, and the other is a place to put my images in documents, which is actually a good thing now that I think of it, because it saves drive space when I re-use images. Funny how problems become solutions.

Solved (I told you it would be stupid simple: I omitted the full path). For images there is an img directory under wtf that I just point my pages to. So instead of  which should work but does not; I have  If anyone knows why having the image in a Hugo bundle block does not work on Congo, please feel free to let me know. I’m sure it’s something stupid simple.

My other web sites are just directories named after the web site, so rkey.tech for this site and ~~r0bwk3y.com~~ rkey.online for my personal site. So far it’s all just child’s play and pretty boring stuff, even for the process of migrating VPS providers and sites over. I even made sure my deploy scripts deployed to both DO and Vultr, so when DNS switched over to the new site everything was in sync and up to date. For this migration process, I did not even have to shut down my monitors.

Later on when I migrated NextCloud from a home server to the cloud and even migrated my Caddy to a jail, things did not feel as much like child’s play. Nor did I do so without taking hits on my up-time monitor.

Yes, Yes, I know the status page says 100% as of today 8-March-22. But it was a migration and I should have paused the monitors anyway. So I feel justified in resetting the counters :)